Paper Notes: Offline Reinforcement Learning

Landscape of Open Problems in Offline RL

Challenges of Offline RL

-

There is no possibility of improving the policy using exploration, so it may be impossible to discover better policy if the dataset does not contain transitions with high-reward regions of the state space. - This problem cannot be solved, so it out of scope.

-

Unlike supervised learning, where train and test distribution are assumed to be the same, the whole point of Offline RL is to learn a policy that does something differently from the logged dataset. - This is the problem of distributional shift.

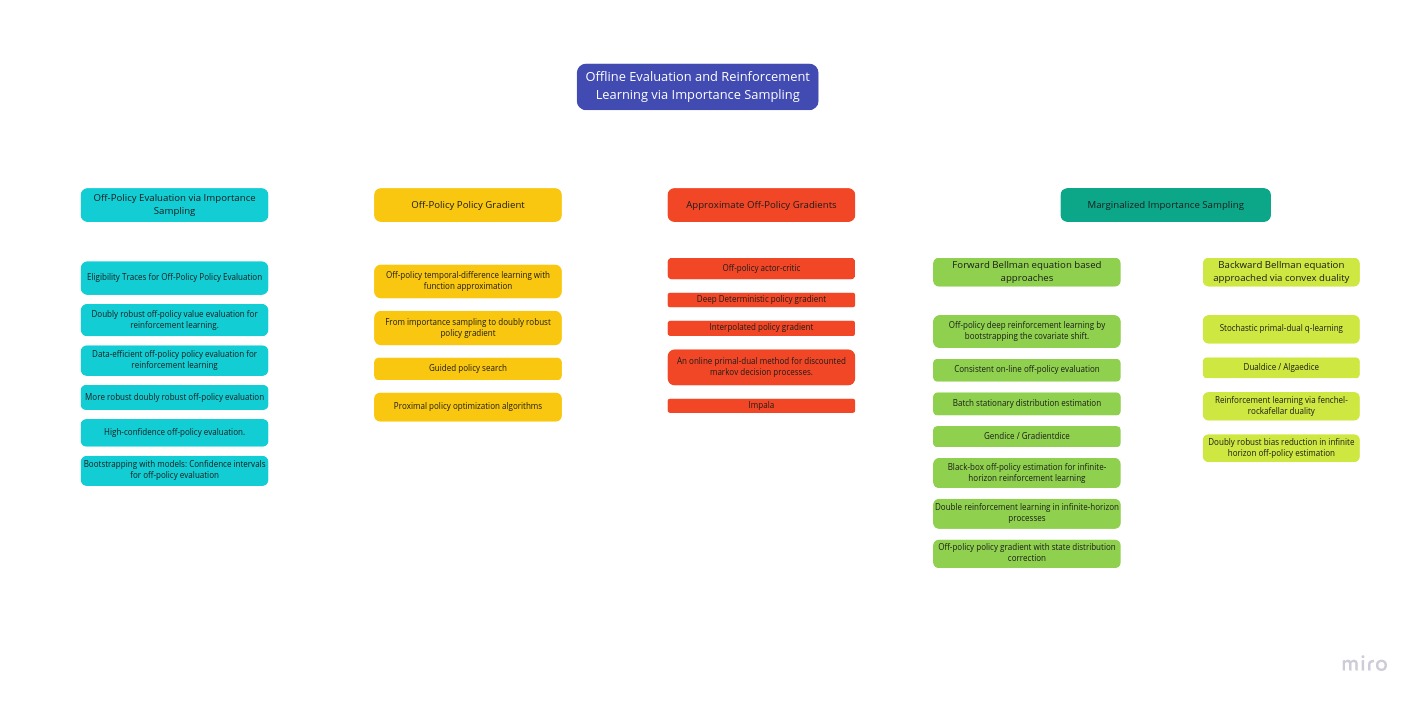

Offline Evaluation and Reinforcement Learning via Importance Sampling

Offline Evaluation via Importance Sampling

- Importance Sampling gives an unbiased estimate of the policy performance but it can have high variance due to the product of importance weights in case of RL.

- Doubly Robust estimator for policy evaluation help reduce this variance by using an approximation of the Q-values in the estimate while still having an unbiased estimate.

- High Confidence OPE approaches provide a lower confidence bound on the expected return of policy in safety-critical scenarios.

Off-Policy Policy Gradient

- Importance Sampling can be used to convert online policy gradient to off-policy gradient algorithms like weighted importance sampling policy gradient estimator.

- Doubly Robust estimators for policy gradient can also be derived from the same by using a baseline for advantage estimates but unfortunately they suffer from high variance to be effective.

- Multiplication of per-action importance weights over the time steps lead to high variance.

- Off-policy algorithms can employ regularization to the learned policy such that it does not deviate far from the behaviour policy. The regularizations can be in form of softmax over importance weights or KL-divergence.

Approximate Off-Policy Gradients

- Approximate importance-sampled gradient can be derived by using the state distribution if the behaviour policy in place of the current policy.

- Although this is a biased estimator of the gradient, it removes the need for importance sampling thereby making it practical.

- This forms the basis of several off-policy actor-critic approaches like DDPG.

- Further improvements can be obtained by introducing control variates and clippingimportance weights to control variance.

Marginalized Importance Sampling

- Another way to avoid bias from off-policy state distribution and variance from per-action importance weighting is the we directly estimate the state-marginal importance ration.

- Calculating the exact ratio is intractable (why?) but methods of estimating marginalized importance ration has been introduced.

- Forward Bellman equation based approaches

- Temporal difference updates can be used to estimate the state-marginal importance ratio under the policy by using tricks like soft-normalization and discounted evaluation.

- Variational power method approach to combine function approximation and power iteration to estimate the state-marginal importance ration has also been studied recently. Similar methods can also be used in off-policy actor critic methods.

- Solving an adversarial, saddle-point optimization is also proposed to obtain the state-marginal importance ratio.

- Approaches applying divergence metric to solve a modified forward bellman equation and constraining the importance ratio to prevent degenerate solutions have also been proposed.

- Backward Bellman equation approached via convex duality

- Application of convex optimization techniques to policy optimization in off-policy setting. Extending these results to practical deep RL settings has proven challenging.

- Devise a convex optimization problem with state-marginal importance ratio as its optimal solution.

- Use of f-divergence as a regularizer between state-action marginal of the learned policy and state-action marginal of the dataset is proposed as an extension of the convex optimization problem.

Challenges and Open Problems

- When behaviour policy is too different from the learned policy, the importance weights will become degenerate and estimate of return will have too much variance. Maximum improvement via importance sampling is limited by:

- suboptimality of behaviour policy

- dimensionality of state and action space

- effective horizon of task

- Most effective off-policy policy gradient methods require estimating value function or state-marginal importance ratio using dynamic programming. Dynamic programming methods suffer from issues pertaining to out-of-distribution queries in offline setting, making it hard to stably learn the value function without additional corrections.